By Jack Davidson

One of the joys of programming is that there are so many different ways to tackle a problem. You may lean into specific paradigms like object-oriented programming or data-oriented design, or make heavy use of specific design patterns because they provide a framework that eases development and testing or adds some form of modularity. All of these paths have their pros and cons: some we can measure and be objective about, while for others we have to let our experience guide us.

Unfortunately, it is not a given that each member of a team will come to the same conclusion about how a problem should be solved. We have all accrued different experiences and lessons while honing our craft, and this is especially true for those who come from different teams or projects and have not worked together for long.

This can result in a lack of cohesion in a shared codebase, where different regions of the code are built using contrasting methodologies. At first this may not be much of an issue, as the regions are isolated from each other, but at some point they come into contact, and methodologies that make sense on their own can create complex code where they meet. A programmer from one region may be exposed to a new part of the codebase when tracking down a performance issue or fixing a bug and find themselves lost, having to stop and build a new mental map of how the code is structured before they can make progress, which slows down development.

We can minimise these risks by taking proactive steps to build alignment in the team. Processes such as reviewing technical designs before implementation and reviewing code after the fact will help create that alignment over time, module by module. However, we think it is valuable to discuss up front how we unify as developers on the current project. By doing so we can surface misalignment early, understand the compromises we can make and commit to how we as a team work and solve problems. Done right, these discussions can speed up technical design and code reviews by reducing the number of surprises that come from misalignment.

A tool we have used to have that discussion is what we refer to as Code Pillars. The process of creating these pillars provides a space for the team to think about alignment early and, more importantly, to get communicating. I will not pretend this is some magic bullet that can solve all our woes; building alignment in the team requires constant attention and communication to maintain. However, code pillars can help get the ball rolling and provide a constant reference point to compare against, anchoring your team to an agreed approach.

What are Code Pillars?

Code pillars to us are an umbrella of core ideals that we agree as a team to work under and to use when planning, building and reviewing code. While they can help alignment during development, they can also provide an insight into the mindset of the team for anyone joining the team or for future engineers after the project, if it is ever revisited.

It is important that code pillars are driven by the team, describing what the team prioritises when solving problems; by focussing on their priorities we can get the buy-in from the team necessary to reach alignment. These priorities may include but are not limited to:

How to think about the problem.

How we structure our code and data.

How the team wants to personally grow and learn as individuals.

How the codebase can best support the team.

Code Pillars are separate from coding standards. They are not hard rules carved in stone that focus on the grammar of what we do; instead they tend to be more high level, guiding us towards the solution our team expects, one that our colleagues would also have arrived at, more or less, given the same problem. The pillars should also be flexible and ready to change when the priorities of the team change.

While Code Pillars are driven by the team and can encapsulate a lot of the team's personal ambitions for development of software, it is important that the team always keeps in mind that code is a means to an end, and that we do not work in isolation. We have to consider the needs of the product, the other disciplines and the eventual users at each step.

With that overview, let’s step through each point and provide some examples of code pillars we have used in different projects to provide some context.

You may agree with some and disagree with others. That’s fine: your team’s code pillars should be a reflection of what’s important to your team and the problems you’re tackling in your project, not ours. My goal here is to get you thinking about what a code pillar can be.

How to think about the problem

We have used the pillar “Solve actual problems - no more, no less” to keep the team focussed on what we know with reasonable certainty, to minimise unnecessary speculation and to avoid complicated general-purpose solutions to simple problems. As a team we all know the desire to create a solution that works for as many cases as we can think of. It can be a challenging and interesting problem, much more fun than a simple function. However, we wanted to keep in mind how likely it is that we will need a solution for these cases, and to consider what we are sacrificing in terms of readability and performance to have that one-size-fits-all system.

Another pillar in this category is “Different problems require different solutions”, acknowledging that large design changes should be reflected in the code. Our code should be tailored to the problem we are trying to solve, and in doing so we can benefit from optimising to its constraints.

How we structure our code and data

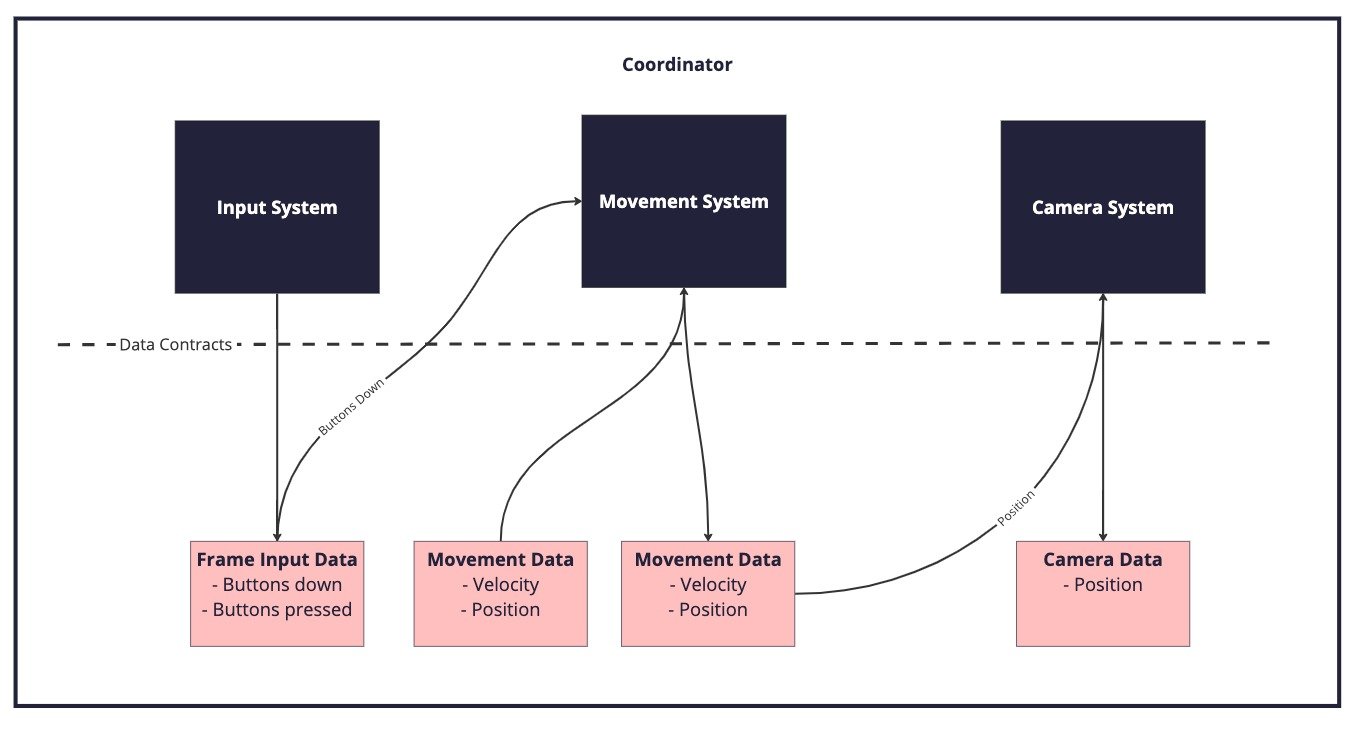

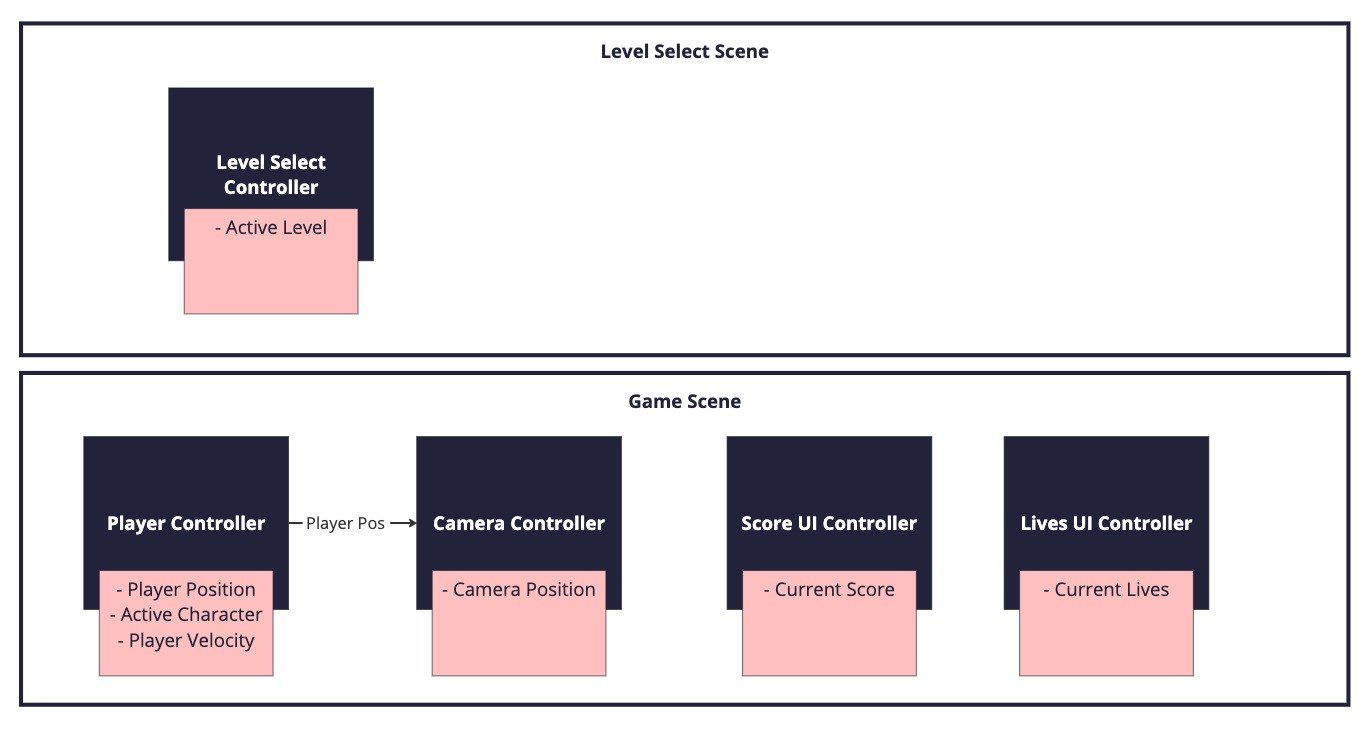

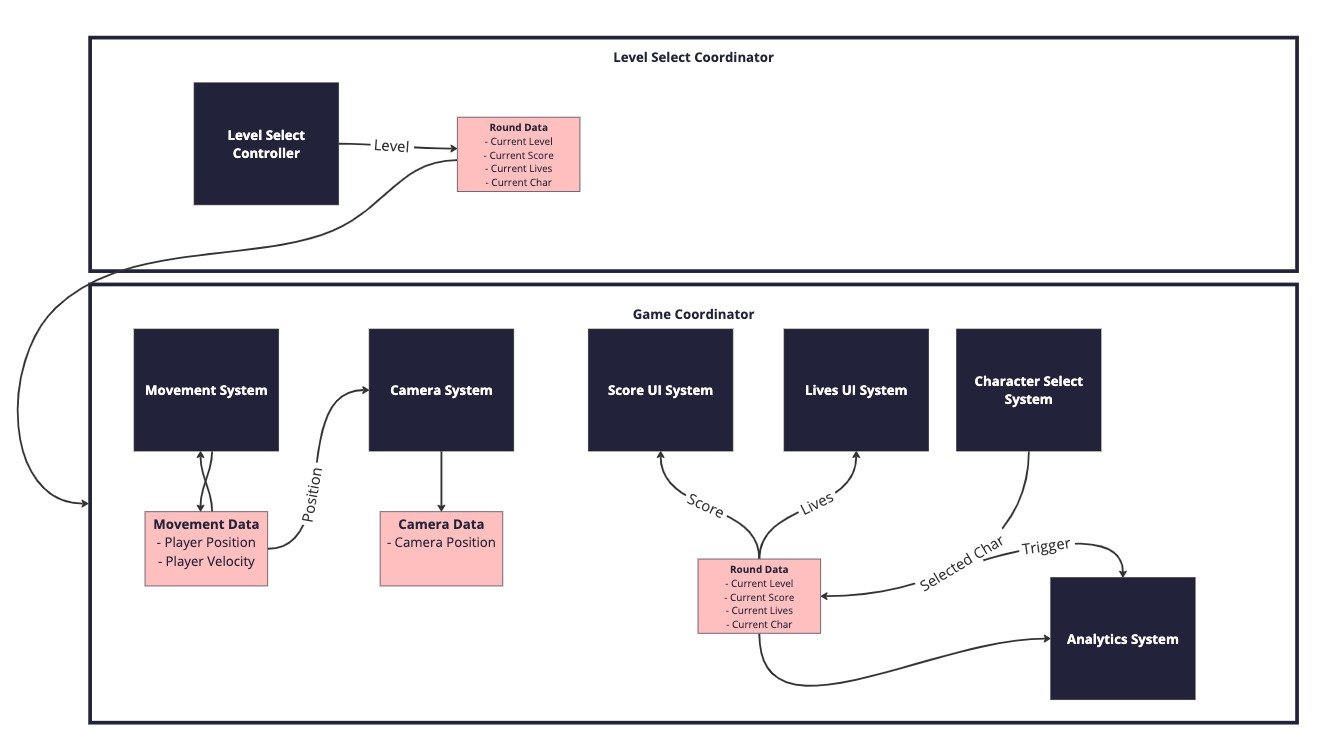

A good example was “Don’t squirrel away data”. We wanted to avoid systems owning too much data, and instead push data down into systems as needed. We admitted as a team that we didn’t know where data would be needed later in development, and that getting it wrong can impact how easy the codebase is to work with. In the past we tried having each system own its own data, which in turn led us to build bridges between systems and couple them together to access that data later. By agreeing up front that the accessibility of data was important to managing the relationship between systems, we did not have to worry about how we could access data when the design of a system changed and required something new, or when we needed to strip a system out of the pipeline.
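As a rough illustration of this pillar, here is a minimal Python sketch. Every name in it (`WorldData`, `update_movement`, `update_scoring`, `tick`) is hypothetical and not taken from any of our projects. The only point is that the data lives outside the systems and is passed down to whichever system needs it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WorldData:
    """Shared data that no single system owns (hypothetical example)."""
    positions: list[float] = field(default_factory=list)
    velocities: list[float] = field(default_factory=list)
    score: int = 0


def update_movement(positions: list[float], velocities: list[float], dt: float) -> None:
    """Movement is handed only the data it needs and owns none of it."""
    for i, velocity in enumerate(velocities):
        positions[i] += velocity * dt


def update_scoring(world: WorldData, goal_line: float) -> None:
    """Scoring reads positions directly, with no bridge to the movement system."""
    world.score = sum(1 for p in world.positions if p >= goal_line)


def tick(world: WorldData, dt: float) -> None:
    # The pipeline pushes data down into each system in turn. Stripping a
    # system out means deleting one line here, not untangling ownership.
    update_movement(world.positions, world.velocities, dt)
    update_scoring(world, goal_line=10.0)


world = WorldData(positions=[9.0, 2.0], velocities=[2.0, 1.0])
tick(world, dt=1.0)
```

If scoring later needs velocities as well, they are already reachable from `world`; nothing has to be exposed by the movement code first.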

A favourite of mine is that code should focus on “Debuggability”, meaning we avoid patterns that make it difficult to know what is happening when something breaks. For example, opt for polling over callbacks, as polling allows you to step through the code and see why a block isn’t executing. You don’t always know where a bug has come from, and you can’t always fall back on the original author to find the problem.
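To make the polling versus callbacks point concrete, here is a small sketch, written in Python for brevity rather than in Unity C#. The `Door` and `KeyEvents` classes and the `update_door` function are made up for illustration.

```python
class Door:
    """Hypothetical game object, for illustration only."""

    def __init__(self) -> None:
        self.is_open = False
        self.key_collected = False
        self.player_nearby = False


class KeyEvents:
    """Callback style: listeners run only when someone fires the event."""

    def __init__(self) -> None:
        self._listeners = []

    def subscribe(self, listener) -> None:
        self._listeners.append(listener)

    def fire(self) -> None:
        for listener in self._listeners:
            listener()


# With callbacks, a door that never opens gives you nothing to step through:
# the handler simply never runs, and you have to hunt for the missing fire().
callback_door = Door()
events = KeyEvents()
events.subscribe(lambda: setattr(callback_door, "is_open", True))
# Nothing calls events.fire(), so callback_door silently stays shut.


def update_door(door: Door) -> None:
    """Polling style: called every frame, with every condition in one place."""
    if not door.key_collected:
        return  # a breakpoint here shows the key is missing
    if not door.player_nearby:
        return  # ...or that the player is out of range
    door.is_open = True


polled_door = Door()
polled_door.key_collected = True
update_door(polled_door)  # player not nearby yet, so the door stays shut
polled_door.player_nearby = True
update_door(polled_door)  # both conditions hold, so the door opens
```

The polled version does a little redundant work each frame, which is the trade we were willing to make for being able to walk through the logic.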

The pillar “Code to last” was a lesson hard learned during prototyping. Eager to move quickly and test out as many ideas as we could, a few other junior engineers and I took shortcuts and hacked things together. “This is fine,” we told ourselves, “this project will only last a month.” Oh, how naive we were. That project lasted 3 months, as new ideas were generated that built on what came before. We soon ended up in a position where the hacks we had used to speed up development were crippling our velocity in later months. With the next project we wanted to manage that velocity better, maintaining a pace that might be slower than that initial run of hacks but was consistent and reliable, so we used “Code to last” as our guiding light while prototyping. This doesn’t mean writing prototypes to a production standard. It means acknowledging the balance required between the flexibility needed to iterate and the stable foundation we can continue to build on, as our code will live longer than we expect.

How the team wants to personally grow and learn as individuals

In a previous project I worked on, a few of the engineers were keen to learn about data-oriented design and how to accomplish this through the use of Unity’s Job system. By surfacing this through the code pillars process, the team was able to have that conversation up front, evaluate the potential and the risks as a group, and, where it made sense for the project, make space to drive that learning forward.

In this case Unity Jobs was a good fit for the type of game we were making, but the timeline of the project and our unfamiliarity with the Jobs system and data-oriented design led us to the pillar “Open to using Unity.Jobs - Group data where it’s used most”. We didn’t commit to using Unity Jobs yet, but we wanted to take steps towards learning about these concepts by making sure that we as a group structured our code, and more importantly our data, in an appropriate manner. In doing so we could get a feel for the challenges and benefits of data-oriented design, while leaving the door open to slot Unity.Jobs in later down the line and investigate its use and impact on performance when time allowed.

How the codebase can best support the team

This example was a direct result of the code team coming from a project where we felt we had dropped the ball, and had made working in the editor unnecessarily difficult or time consuming for other disciplines and for ourselves. So we agreed as a group that “Code decisions should empower other disciplines”. We wanted to focus on fast game startup and on minimising iteration time when working with data and assets. The more we as creatives can iterate, the better the final outcome.

How we define our code pillars!

Hopefully by this point you see some of the value in creating your own pillars to help align and guide the team - maybe you even have a couple of ideas. Let’s discuss how we go about actually constructing our pillars to ensure they are memorable, useful and usable.

This process should start with each new project or change in direction. Take your previous code pillars with you as a reference, but you don’t have to stick with them. Your new project or direction might have new priorities, or the pillars you want to work toward may have changed as you and your colleagues have grown. Take the time to understand what is important to your team now and going forward.

As I’ve said already, it’s vital that we get everyone involved. If we all have a stake in the pillars’ creation, everyone becomes invested in making them work. I’ve enjoyed projects where this process was easy: we may have started with some misalignment, but through the discussion we got excited by the potential ahead and were forged into a much stronger team. I’ve also delighted in projects where this process of building alignment was filled with more lively debate. While we were all made better by these discussions, there came a point where we needed to agree to disagree, and commit. It’s at this stage that you need to rely on a critical mass of the team and its key figures being on board; otherwise you lose any buy-in and the pillars will be doomed to fail.

What this looks like at scale in a much larger team, I’m not sure yet, but I look forward to tackling the problem and learning how this applies.

When it comes to constructing your code pillars, it can be useful to look back on your previous project. Consider what worked and what problems you ran into. Can your code pillars help mitigate these problems while shoring up what was successful?

Ask your team a few questions to get the conversation going. A good starting point is: how many pillars should we have? Too many and they become hard to remember, too few and you might miss what gets people excited. The likelihood that everything can be covered by one set of pillars is slim, so some tough choices may lie ahead to determine what the priority is for the team and the project. To actually understand the team’s priorities, I’ve tried asking:

What do we want to achieve as programmers?

What do we want to learn?

How do we want to structure our code and data?

How can we best support other disciplines?

What are the key product risks we are looking to mitigate?

While these are more generic questions that could apply to any project, it is important to remember your current project may have unique problems that need to be a focus of the team. For example, in a project targeting console platforms we also asked “Should certification drive some of our pillars?”. These questions should set us up to concentrate on what’s important for this project and the team.

We opt to start by brainstorming to discover what the team finds important, collecting similar themes together before voting on our highest priorities. We’ve found techniques such as dot voting to be particularly useful in narrowing down to a shortlist. You may even wish to give extra weight to votes from senior members of the team.
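If you collect the dots digitally rather than on a whiteboard, the tally is simple to script. The sketch below is one possible way to do it, not a description of a tool we used; the function `tally_dot_votes`, the voter names and the weight of 2 for the lead are all assumptions made for the example.

```python
from collections import Counter


def tally_dot_votes(ballots, weights=None):
    """Count dots per pillar and return (pillar, total) pairs, highest first.

    ballots: mapping of voter name -> list of pillars they placed a dot on
             (a pillar may appear more than once).
    weights: optional mapping of voter name -> multiplier; voters who are
             not listed count as 1.
    """
    weights = weights or {}
    totals = Counter()
    for voter, dots in ballots.items():
        for pillar in dots:
            totals[pillar] += weights.get(voter, 1)
    return totals.most_common()


# Hypothetical ballots: three dots per person.
ballots = {
    "alex": ["Debuggability", "Code to last", "Debuggability"],
    "sam": ["Code to last", "Don't squirrel away data", "Code to last"],
    "lead": ["Don't squirrel away data", "Debuggability", "Code to last"],
}

# Assumed weighting: each of the lead's dots counts double.
shortlist = tally_dot_votes(ballots, weights={"lead": 2})
for pillar, total in shortlist:
    print(f"{total:>2}  {pillar}")
```

With these ballots “Code to last” comes out on top with 5, followed by “Debuggability” with 4 and “Don’t squirrel away data” with 3. The numbers only feed the conversation; the team still decides where to draw the line.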

The next step is to format these pillars into something that is easy and quick to reference, so as to refresh our memories. Start with a snappy headline, so that when it is repeated in a document or a meeting the team knows what it means. A headline alone isn’t enough, however: we all forget, and new members of the team will have missed the conversations that provide context. Follow through with some bullet points or paragraphs to flesh out the concept. Essentially, we need to capture the information necessary for the reader to understand what the pillar is, why it’s important and how they can achieve its goal.

With everything formatted, some gorgeous posters lining the office walls, the team is aligned! … If only. At the end of the day Code Pillars are a jumping-off point, to get the team thinking about how we stay aligned. It is important that the team refers back to them regularly. When discussing solutions to a problem, they can be used as evaluation criteria to decide the best solution for the project. When reviewing code, they can be used as a marker to understand whether the code meets the expectations of the team. By keeping them in the conversation at each stage of the code development lifecycle, they will, piece by piece, be internalised in how we develop software.

As a team it’s necessary to keep checking whether the pillars are still relevant as time goes on. Whether the code the team is creating has started to diverge from the pillars can be a rough measure of the team’s alignment. It’s worth highlighting any disparity with the team. The result may be that they want to continue with the existing pillars, or they may decide that there are new priorities that should take precedence.

It may be that the team has stuck to the pillars to the point that they have become second nature. The conversation might then turn to whether there are any new pillars the team can strive towards to continue to improve.

It is likely that the context of the project has changed as time has progressed. Do the team’s priorities still allow the project to achieve its potential, or do they run counter to the current direction?

Whatever the situation, checking in and having these conversations is an important part of maintaining that alignment.

Building alignment in a team is no easy task, especially as teams grow larger and the deadlines get closer. We’ve certainly not perfected it. Code pillars are not a silver bullet that’s going to do all the work for you; they can only ever be high-level guidelines. There is potential for misalignment over how to achieve the pillars, which will only show as code is developed. When this happens, it’s a sign to circle back and discuss what the pillar means, how it applies and whether this is a case to bend the rules. As with any disagreement, it’s a learning opportunity for everyone involved, and we should all aim to come in with an open mind to how others frame the problem and its constraints, and how those, paired with their interpretation of the pillars, lead to a solution.

This process and its ability to make stronger teams is something I am passionate about, so I am very keen to hear if anyone goes through this process or something similar - what were your pillars and are they helping?